Talk to ChatGPT in Your CLI

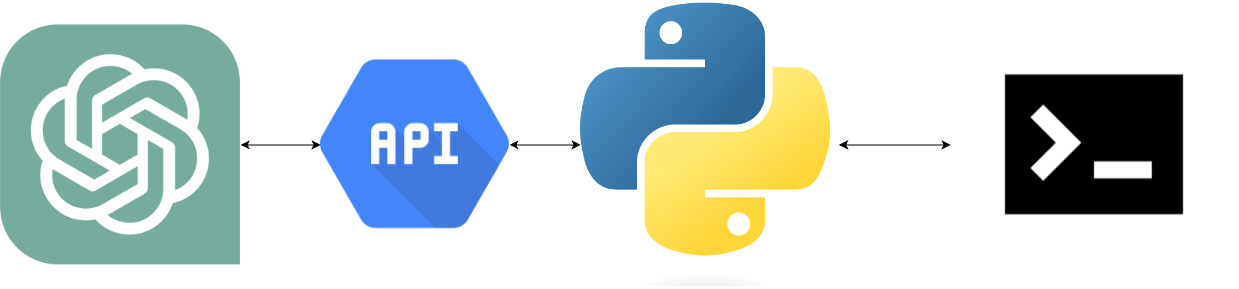

ChatGPT and other large language models are amazing. Many of them come with a nice, convenient web and app interface. But I often find myself too lazy to switch to the chat window while I am coding or working in an open terminal. So why not make OpenAI’s ChatGPT model accessible through your CLI or from Python code?

Let’s harness the OpenAI API for that!

What do you need before we jump to the Code?

To make everything go smoothly, there are a few requirements to take care of before we dive into the code itself.

- OpenAI ChatGPT API key – take a look here for a step-by-step guide on how to create such a key in 60 seconds.

- Python installed.

- Python libraries: requests (argparse is also used, but it ships with the Python standard library)

- OpenAI API key configured as an environment variable

Let’s start by installing the Python library:

    pip install requests

Next, set the OpenAI API key as an environment variable:

    export OPENAI_API_KEY="YOUR_API_KEY"

Implementation

So, how do we communicate with our beloved language model? Through a REST API.

This means all we need is a nice API interface, and we are done.

Let’s do it correctly 🙂 We start by creating RestAPIInterface, an abstract class that serves as the core of the REST API communication.

The goal is to make it agnostic to the content of the request.

Why? Suppose that tomorrow, next month, or at any time in the future, the content that OpenAI expects or returns changes. We would like to get back on track with a minimal amount of code change.

How do we achieve that? RestAPIInterface doesn’t know the API key, the request body, or the API URL… only the communication protocol.

    import logging
    import time
    from abc import ABC, abstractmethod
    from typing import Any, Dict

    import requests


    class RestAPIInterface(ABC):
        """Handles the HTTP exchange only; subclasses supply key, URL, body and parsing."""

        def __init__(self, logger: logging.Logger = logging.getLogger()) -> None:
            self.logger = logger

        def _get_headers(self) -> Dict[str, str]:
            return {
                "Content-Type": "application/json",
                "Authorization": f"Bearer {self._get_api_key()}"
            }

        @abstractmethod
        def _get_api_key(self) -> str:
            pass

        @abstractmethod
        def _get_body(self, prompt_message: str) -> Any:
            pass

        @abstractmethod
        def _get_api_url(self) -> str:
            pass

        @abstractmethod
        def _parse_response(self, response: Dict[str, Any]) -> Any:
            pass

        def prompt(self, body: str) -> Any:
            """
            Sends the prompt and returns the parsed API response.
            Raises Exception if the request failed.
            """
            retries = 2
            retry_delay = 30  # seconds
            for attempt in range(retries):
                self.logger.debug(f'Sending prompt to API: {body}')
                response = requests.post(
                    self._get_api_url(),
                    headers=self._get_headers(),
                    data=self._get_body(body)
                )
                if response.status_code == 429:
                    # Rate limited: wait, then try again
                    self.logger.warning(
                        f"429 error: Too Many Requests. Retrying in {retry_delay} seconds. Attempt {attempt + 1}.")
                    time.sleep(retry_delay)
                    continue
                else:
                    break

            try:
                response.raise_for_status()
            except Exception as e:
                error_message = f"Request to api endpoint: {self._get_api_url()} failed.\n" \
                                f"Response message: {response.text}.\n" \
                                f"Exception caught: {e}"
                self.logger.error(error_message)
                raise Exception(error_message)

            return self._parse_response(response.json())
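The retry behaviour in prompt can be exercised without touching the network. The following is only a sketch of the same loop, not the class above: post_with_retry, FakeResponse, and the injectable send and sleep callables are hypothetical names introduced here so the loop can run against a canned sequence of status codes.

```python
from typing import Callable, List


class FakeResponse:
    """Hypothetical stand-in for requests.Response; only status_code is modelled."""

    def __init__(self, status_code: int) -> None:
        self.status_code = status_code


def post_with_retry(send: Callable[[], FakeResponse],
                    retries: int = 2,
                    retry_delay: float = 30,
                    sleep: Callable[[float], None] = lambda seconds: None) -> FakeResponse:
    """Call send() up to `retries` times, pausing after every 429 response."""
    for _ in range(retries):
        response = send()
        if response.status_code == 429:
            sleep(retry_delay)  # rate limited: wait before the next attempt
            continue
        break
    return response


# First call is rate limited, second call succeeds.
canned = iter([FakeResponse(429), FakeResponse(200)])
waits: List[float] = []
result = post_with_retry(lambda: next(canned), sleep=waits.append)
print(result.status_code, waits)  # 200 [30]
```

Passing sleep as a parameter is a choice made for this sketch: it lets the waiting be recorded instead of actually pausing for 30 seconds.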

Now let’s move on to our OpenAI ChatGPT-specific class. This class needs to implement four functions:

- _get_api_url – the API URL to send requests to.

- _parse_response – post-processing done on the response that came from the server (OpenAI).

- _get_api_key – the API key for authorization.

- _get_body – pre-processes the textual data into a request.

    import json
    import logging
    from typing import Any, Dict

    # RestAPIInterface is the abstract class defined above; import it here
    # if it lives in a separate module.

    COMPLETIONS_API_URL = "https://api.openai.com/v1/chat/completions"


    class GPT(RestAPIInterface):

        def __init__(self, api_key: str, model: str = 'gpt-3.5-turbo',
                     logger: logging.Logger = logging.getLogger()) -> None:
            if not api_key:
                raise ValueError("api key cannot be None or empty.")
            super().__init__(logger)
            self.api_url = COMPLETIONS_API_URL
            self.api_key = api_key
            self.model = model

        def _get_api_url(self) -> str:
            return self.api_url

        def _parse_response(self, response: Dict[str, Any]) -> Any:
            # Keep only the text of the first returned choice
            return {'response': response['choices'][0]['message']['content']}

        def _get_api_key(self) -> str:
            return self.api_key

        def _get_body(self, prompt_message: str) -> Any:
            # Serialize the prompt as a single user message
            return json.dumps({
                "model": self.model,
                "messages": [
                    {"role": "user",
                     "content": prompt_message}
                ]
            })
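To make the two data-shaping methods concrete, here is a small sketch that runs without an API key. build_body and parse_response are hypothetical standalone copies of _get_body and _parse_response, and sample_response is a hand-written dictionary containing only the fields the parser reads, not a real API reply.

```python
import json
from typing import Any, Dict


def build_body(prompt_message: str, model: str = "gpt-3.5-turbo") -> str:
    """Serialize a prompt into the JSON request body, as _get_body does."""
    return json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt_message}],
    })


def parse_response(response: Dict[str, Any]) -> Dict[str, str]:
    """Extract the text of the first choice, as _parse_response does."""
    return {"response": response["choices"][0]["message"]["content"]}


body = build_body("Which fruit is the healthiest?")
print(body)

# Hand-written sample with only the fields that parse_response reads.
sample_response = {
    "choices": [
        {"message": {"role": "assistant", "content": "It depends on your diet."}}
    ]
}
print(parse_response(sample_response))
```

If the request or response format ever changes, these two functions are the only places that would need to be touched, which is the point of keeping them out of RestAPIInterface.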

Putting it all together, we do the following:

- Read the OpenAI API key from the environment variable

- Create a GPT object

- Send messages to our ChatGPT

    import os

    from gpt import GPT

    api_key = os.getenv('OPENAI_API_KEY', None)
    if api_key is None:
        raise Exception("Please set OPENAI_API_KEY to use ChatGPT")

    interface = GPT(api_key=api_key)
    # Print the result so it is visible when run as a script
    print(interface.prompt("Which fruit is the healthiest?"))

Wow! We made it: we can now interact with ChatGPT through our code.
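Note that prompt returns a dictionary with a response key rather than a bare string. A minimal sketch of pulling out just the text follows; StubInterface and ask are hypothetical names, and the stub returns a canned answer so the snippet runs without an API key or network access.

```python
from typing import Dict


class StubInterface:
    """Hypothetical stand-in for GPT: same prompt() return shape, no network call."""

    def prompt(self, body: str) -> Dict[str, str]:
        return {"response": f"(canned answer to: {body})"}


def ask(interface, question: str) -> str:
    """Send a question and return only the answer text."""
    return interface.prompt(question)["response"]


answer = ask(StubInterface(), "Which fruit is the healthiest?")
print(answer)
```

Swapping StubInterface() for a real GPT(api_key=...) object should work the same way, since ask relies only on the prompt method and the response key.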

But wait, I promised you the CLI, right?

CLI Integration

Two options come to mind. The first is to wrap this code in a main.py and run it from the CLI:

    import os
    import argparse

    from gpt import GPT


    def main():
        parser = argparse.ArgumentParser(description='ChatGPT In your CLI')
        # The prompt is required; without it there is nothing to send
        parser.add_argument('-p', '--prompt', type=str, required=True,
                            help="prompt for ChatGPT")
        args = vars(parser.parse_args())

        api_key = os.getenv('OPENAI_API_KEY', None)
        if api_key is None:
            raise Exception("Please set OPENAI_API_KEY to use ChatGPT")

        interface = GPT(api_key=api_key)
        print(interface.prompt(args['prompt']))


    if __name__ == '__main__':
        main()

Then run it from the terminal:

    python main.py -p "Which fruit is the healthiest?"

The second option is a bit more work but much more convenient for the user: package the code as a library and expose it as a command. Let’s add a setup.py file in our project folder:

    from setuptools import setup, find_packages

    version = '1.0.0'

    setup(
        name='ChatCLI',
        license="Apache License 2.0",
        version=version,
        author='MachineLeaningEngineeringPlace',
        description='ChatGPT In Your CLI',
        url='https://mlengineeringplace.com',
        packages=find_packages(exclude=["cli*", "version*", "main*"]),
        install_requires=[
            'requests',  # argparse is part of the standard library
        ],
        # Three command names, all pointing at the same main() function
        entry_points={
            "console_scripts": [
                "chat=chatcli.main:main",
                "chatcli=chatcli.main:main",
                "askme=chatcli.main:main",
            ]
        },
    )

Install the library – open a terminal in the project directory and run:

    pip install ../chatcli

That’s it – use it:

    chatcli -p "your question"

or

    askme -p "your question"

or

    chat -p "your question"

Conclusion

So what did we learn today? We implemented our own ChatGPT Python and CLI interface from scratch, which lets us ask any question directly from the CLI.

What’s next?

Have you heard about Pandas AI? It also uses OpenAI’s ChatGPT, so take a look: press here.