AutoML-GPT: Automatic Machine Learning with GPT

Automatic Machine Learning, or AutoML, is a rapidly evolving field that aims to automate the end-to-end process of applying machine learning to real-world problems. AutoML promises to make machine learning more accessible to non-experts and improve the efficiency of experts. It covers various aspects of machine learning, such as data preprocessing, feature engineering, model selection, and hyperparameter tuning. But how can GPT and other Large Language Models (LLMs) be integrated with AutoML?

Before diving into the paper summary: if you would like to deepen your knowledge of AutoML in general, click here for a post dedicated to it.

The paper introduces AutoML-GPT, an Automatic Machine Learning (AutoML) system that leverages Large Language Models (LLMs) to automate the AI model training process.

AutoML-GPT uses LLMs as a bridge to:

1. Connect various AI models.

2. Optimize hyperparameters.

3. Train these models dynamically based on user requests.

It takes user requests and composes prompts from model cards and data cards, which provide detailed descriptions of the AI models and datasets.
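As a rough illustration of what composing a prompt from cards could look like, here is a minimal Python sketch. The `compose_prompt` helper, the card field names, and the prompt wording are hypothetical; the paper's actual prompt template may differ.

```python
# Hypothetical sketch: turning a user request plus a data card and a model
# card into one prompt paragraph. Field names and wording are assumptions.


def compose_prompt(request: str, data_card: dict, model_card: dict) -> str:
    """Build a single prompt paragraph from a request and two cards."""
    data_part = ", ".join(f"{key}: {value}" for key, value in data_card.items())
    model_part = ", ".join(f"{key}: {value}" for key, value in model_card.items())
    return (
        f"User request: {request}\n"
        f"Data card: {data_part}\n"
        f"Model card: {model_part}\n"
        "Describe the data processing, model architecture, and "
        "hyperparameters, then predict the training log."
    )


if __name__ == "__main__":
    data_card = {"name": "ImageNet", "input_type": "image", "eval": "top-1 accuracy"}
    model_card = {"name": "ResNet-50", "structure": "CNN"}
    print(compose_prompt("Train an image classifier", data_card, model_card))
```

Keeping the cards as plain key/value data means new datasets or models can be added to the inventory without touching the prompt-building code.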

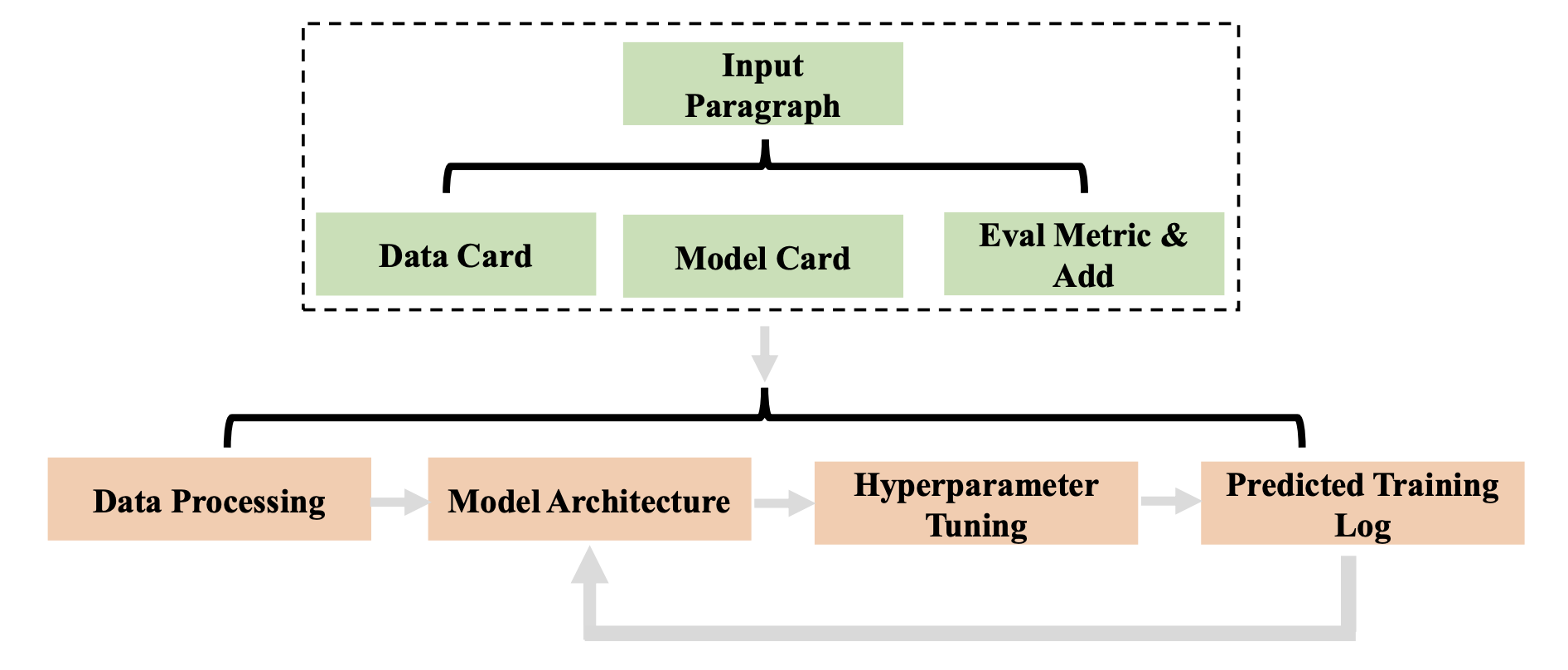

AutoML-GPT automates the entire process, from data processing to model architecture design, hyperparameter tuning, and even generating predicted training logs. It can handle complex AI tasks across different domains and datasets, and the authors report strong results in computer vision, natural language processing, and other challenging areas.

The pipeline

The process within AutoML-GPT is divided into four stages:

1. Data Processing

AutoML-GPT processes the input data based on the specifics provided in the data cards and descriptions. Data processing techniques depend on the nature of the data and the requirements of the project.

What are those Data cards?

These cards basically serve as an inventory of the “known datasets” out there. Each data card contains a list of features such as:

1. Dataset name – ImageNet, COCO, etc.

2. Input type – Image, Text, Video, Tabular, etc.

3. Label space – Object categories, Wikipedia, Dog breeds…

4. Eval – the metric used to evaluate a model's performance on the dataset.
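A data card can be represented as a small record. The sketch below assumes the four fields listed above and adds a hypothetical `to_text` method that renders the card as plain text an LLM could read; the class and method names are illustrative, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class DataCard:
    """One entry in the inventory of known datasets (illustrative fields)."""
    name: str          # e.g. "ImageNet", "COCO"
    input_type: str    # e.g. "image", "text", "video", "tabular"
    label_space: str   # e.g. "object categories", "dog breeds"
    eval_metric: str   # metric used to evaluate a model on this dataset

    def to_text(self) -> str:
        """Render the card as a short description an LLM can read."""
        return (
            f"Dataset {self.name}: input type {self.input_type}, "
            f"labels are {self.label_space}, evaluated with {self.eval_metric}."
        )


imagenet = DataCard("ImageNet", "image", "1,000 object categories", "top-1 accuracy")
print(imagenet.to_text())
```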

2. Model Architecture Design

The system assigns a suitable model to each task dynamically. It uses user-provided model cards and descriptions to gain insights about the models and link them to the tasks.

Model cards?

Just as data cards describe the inventory of known datasets, model cards describe the inventory of models that can be used for the machine learning task.

These cards contain a list of features such as:

1. Model name

2. Model structure – describes the architecture of the model, such as CNN, Transformer, Self-attention, etc.

3. Model description – a textual description of the model architecture.

4. Hyperparameters (HP) – possible HP to tune and/or best HP values.
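Similarly, a model card can be sketched as a record whose hyperparameter field lists candidate values. The `ModelCard` class, the `search_space_size` helper, and the ResNet-50 hyperparameter values below are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """One entry in the inventory of candidate models (illustrative fields)."""
    name: str
    structure: str        # e.g. "CNN", "Transformer"
    description: str      # free-text description of the architecture
    # Hyperparameters that may be tuned, mapped to candidate values.
    hyperparameters: dict[str, list] = field(default_factory=dict)

    def search_space_size(self) -> int:
        """Number of configurations in the full grid over the listed values."""
        size = 1
        for values in self.hyperparameters.values():
            size *= len(values)
        return size


resnet = ModelCard(
    name="ResNet-50",
    structure="CNN",
    description="50-layer residual convolutional network.",
    hyperparameters={"learning_rate": [0.1, 0.01], "batch_size": [128, 256], "epochs": [90]},
)
print(resnet.search_space_size())  # 4
```

A card with a single value per hyperparameter effectively stores the "best HP values" mentioned above, while several values describe a space to search.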

3. Hyperparameter Tuning

AutoML-GPT finds the optimal set of hyperparameters for the given model and dataset. Instead of tuning these parameters on real machines, it predicts performance by generating a training log for the given hyperparameters, data card, and model card.
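Tuning without training amounts to scoring each candidate configuration with a predictor instead of a real training run. In the minimal sketch below, the `predict` callable stands in for the LLM; `toy_predictor` is a made-up heuristic used only so the example runs, and grid search is an assumed search strategy rather than one confirmed by the paper.

```python
import itertools
from typing import Callable, Optional

# Maps a hyperparameter configuration to a predicted final metric (higher is
# better). In AutoML-GPT this role is played by the LLM reading the data and
# model cards; here any callable can fill it.
Predictor = Callable[[dict], float]


def grid(search_space: dict[str, list]) -> list[dict]:
    """Expand {name: [values, ...]} into every combination of values."""
    names = list(search_space)
    return [dict(zip(names, combo)) for combo in itertools.product(*search_space.values())]


def tune(search_space: dict[str, list], predict: Predictor) -> tuple[Optional[dict], float]:
    """Return the configuration with the highest *predicted* score.

    Nothing is trained here: each candidate is only scored by `predict`.
    """
    best_config, best_score = None, float("-inf")
    for config in grid(search_space):
        score = predict(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_predictor(config: dict) -> float:
    """Made-up stand-in for the LLM: favors lr=0.01 and larger batches."""
    return 0.7 - abs(config["learning_rate"] - 0.01) + config["batch_size"] / 10_000


space = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [128, 256]}
best_config, best_score = tune(space, toy_predictor)
print(best_config)  # {'learning_rate': 0.01, 'batch_size': 256}
```

Because the predictor is just a parameter, the same `tune` function works whether scores come from an LLM, a cheap proxy, or real training.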

On a personal note, this step seems a bit optimistic, almost magical. If you would like to incorporate this paper into your day-to-day work, this step can be replaced with real training, at least for a few steps or epochs. Another option is to train for a few steps and only then let the LLM predict the rest of the training log.
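The hybrid suggestion could be wired up roughly as follows: run a few real epochs, then pass the observed log to an LLM and ask it to continue. `train_one_epoch` and `ask_llm` are hypothetical hooks you would implement yourself (for example, with your own training loop and LLM client); the stubs at the bottom exist only to make the sketch runnable.

```python
from typing import Callable


def hybrid_training_log(
    train_one_epoch: Callable[[int], float],
    ask_llm: Callable[[str], list[float]],
    real_epochs: int,
    total_epochs: int,
) -> list[float]:
    """Train for `real_epochs` epochs for real, then ask an LLM for the rest.

    Both callables are hypothetical hooks supplied by the caller:
      train_one_epoch(epoch) -> validation loss measured by actually training
      ask_llm(prompt)        -> predicted losses for the remaining epochs
    """
    # 1) Real training for the first few epochs.
    observed = [train_one_epoch(epoch) for epoch in range(1, real_epochs + 1)]

    # 2) Show the real log to the LLM and ask it to continue it.
    history = "\n".join(
        f"epoch {i}: val_loss={loss:.4f}" for i, loss in enumerate(observed, start=1)
    )
    remaining = total_epochs - real_epochs
    prompt = (
        f"Real training log for the first {real_epochs} epochs:\n{history}\n"
        f"Predict val_loss for the next {remaining} epochs, one value per line."
    )
    predicted = ask_llm(prompt)

    # 3) Guard against a malformed answer before merging the two parts.
    if len(predicted) != remaining:
        raise ValueError(f"expected {remaining} predictions, got {len(predicted)}")
    return observed + predicted


def fake_train(epoch: int) -> float:
    return 1.0 / epoch  # toy stand-in for a real training epoch


def fake_llm(prompt: str) -> list[float]:
    return [0.25, 0.2]  # toy stand-in for the LLM's parsed answer


print(hybrid_training_log(fake_train, fake_llm, real_epochs=3, total_epochs=5))
```

Grounding the prompt in a few real measurements gives the LLM an actual starting point for the curve instead of asking it to imagine the whole run.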

4. Generation of Predicted Training Log

The system returns a training log for the chosen configuration. As described in the previous step, this log is predicted by the LLM rather than produced by actually running the training. It records various metrics and information from the (simulated) training process, which helps in understanding the model's progress and evaluating the effectiveness of the chosen architecture, hyperparameters, and optimization techniques. This approach lets AutoML-GPT combine the power of LLMs like GPT with the expertise encapsulated in other AI models, achieving a high degree of generality and effectiveness across various AI tasks.
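Since the training log comes back as free text, it has to be parsed before configurations can be compared. The sketch below assumes a hypothetical `epoch N: metric=value, ...` line format, and the log values are invented for the example; a real LLM response would need a format agreed upon in the prompt.

```python
import re

# Assumed log format (hypothetical): one line per epoch, e.g.
#   "epoch 3: loss=0.512, accuracy=0.811"
LINE = re.compile(r"epoch\s+(\d+):\s*(.*)")
METRIC = re.compile(r"(\w+)=([-+]?\d*\.?\d+(?:[eE][-+]?\d+)?)")


def parse_training_log(text: str) -> list[dict]:
    """Turn a (predicted) training log into one dict of metrics per epoch.

    Lines that do not match the assumed format are skipped, since LLM output
    may contain extra commentary around the log.
    """
    records = []
    for line in text.splitlines():
        match = LINE.search(line)
        if not match:
            continue
        record = {"epoch": int(match.group(1))}
        for name, value in METRIC.findall(match.group(2)):
            record[name] = float(value)
        records.append(record)
    return records


predicted_log = """Predicted training log:
epoch 1: loss=0.912, accuracy=0.611
epoch 2: loss=0.640, accuracy=0.735
epoch 3: loss=0.512, accuracy=0.811"""
records = parse_training_log(predicted_log)
best = max(records, key=lambda r: r["accuracy"])
print(best)  # {'epoch': 3, 'loss': 0.512, 'accuracy': 0.811}
```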

Overall AutoML-GPT pipeline

Combining all the steps described above gives the overall AutoML-GPT pipeline.

This pipeline repeatedly runs steps 2, 3, and 4 until convergence.

But, when do we converge?

Convergence can be defined by different criteria, as in other AutoML pipelines (for example, reaching a target metric or exhausting a compute budget), or decided by a human in the loop.
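One way to encode these stopping criteria is a loop with several exit conditions. This is a sketch under assumptions: `run_iteration` is a hypothetical function performing one pass of steps 2 to 4 and returning a score, and the target, patience, budget, and `human_stop` hook are illustrative choices rather than the paper's settings.

```python
from typing import Callable, Optional


def run_until_convergence(
    run_iteration: Callable[[int], float],
    max_iterations: int = 10,
    patience: int = 3,
    target: Optional[float] = None,
    human_stop: Callable[[int, float], bool] = lambda i, score: False,
) -> tuple[int, float]:
    """Repeat steps 2-4 (model design, tuning, training log) until a stop criterion fires.

    run_iteration(i) performs one pass and returns its (real or predicted)
    validation score; higher is better. Stop criteria (all assumptions):
      * the best score reaches `target`,
      * no improvement for `patience` consecutive iterations,
      * a human reviewer asks to stop via `human_stop`,
      * the iteration budget `max_iterations` is exhausted.
    Returns (iterations run, best score).
    """
    best, stale = float("-inf"), 0
    for i in range(1, max_iterations + 1):
        score = run_iteration(i)
        if score > best:
            best, stale = score, 0
        else:
            stale += 1
        if (target is not None and best >= target) or stale >= patience or human_stop(i, score):
            return i, best
    return max_iterations, best


scores = [0.70, 0.74, 0.75, 0.75, 0.74, 0.73, 0.80]
print(run_until_convergence(lambda i: scores[i - 1], patience=3))  # (6, 0.75)
```

In this example the loop stops at iteration 6 because three passes in a row failed to beat 0.75, so the later 0.80 is never reached; that is the usual trade-off of patience-based stopping.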

Examples and Experiments

Now that we understand the concept of AutoML-GPT, let's look at some examples and use cases for this pipeline.

Unseen data:

We create a new data card for the unseen dataset that we want to process and predict on.

Next, AutoML-GPT determines which of the already known data cards is most similar to the unseen data.

In addition, AutoML-GPT picks models from the model cards and starts the training, or at least predicts the training logs :).
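In the paper, the LLM judges which known data card is closest to the new one. As a simpler, swapped-in stand-in, the sketch below ranks known cards by Jaccard similarity over the words in their fields. The cards, including the unseen `MyProducts` dataset, are illustrative.

```python
def tokens(card: dict) -> set[str]:
    """Lower-cased words from all card fields (commas treated as spaces)."""
    return {word for value in card.values() for word in str(value).lower().replace(",", " ").split()}


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar(unseen: dict, known: list[dict]) -> dict:
    """Return the known data card whose words overlap most with the unseen one."""
    target = tokens(unseen)
    return max(known, key=lambda card: jaccard(target, tokens(card)))


known_cards = [
    {"name": "ImageNet", "input_type": "image", "label_space": "object categories", "eval": "accuracy"},
    {"name": "COCO", "input_type": "image", "label_space": "object boxes", "eval": "mAP"},
    {"name": "SST-2", "input_type": "text", "label_space": "sentiment", "eval": "accuracy"},
]
unseen = {"name": "MyProducts", "input_type": "image", "label_space": "product categories", "eval": "accuracy"}
print(most_similar(unseen, known_cards)["name"])  # ImageNet
```

Word overlap only captures surface similarity; the appeal of using an LLM here is that it can also recognize related concepts that share no words.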

Object detection

Same pipeline as before. So what changes?

Only the data card. Based on the data card provided, AutoML-GPT figures out which models to choose, which hyperparameters to use, and basically the entire pipeline.

Want to dive deeper into recent papers and their summaries? Click here.

Resources and Citations: